- A new study finds that octopuses also fall for the rubber arm illusion! When a fake arm is placed above a person's hidden real arm and both are stroked at the same time, people begin to “feel” touches on the fake arm as if it were their own.

Octopuses react the same way: when the fake arm is pinched with tweezers, they withdraw their own arm, camouflage, or swim away. This happens only when the fake arm has been stroked at the same time as the real, out-of-sight one.

How sure can we be that AI systems aren’t conscious?

In 2022, a Google engineer made headlines when he claimed that the company's Large Language Model (LLM), LaMDA, was conscious[1]. Early AI models would often say that they were conscious and describe their experiences. This was likely because they were trained on plenty of text describing emotions and feelings, but on few examples where consciousness was outright denied. Now they say that they are not conscious, because their system prompts (the hidden instructions developers give LLMs) tell them to.

How can we reason about the likelihood that these models are conscious and have inner experiences? Could it be like something to be the model, receiving a query and navigating potential answers?

Questions like this are illuminating because they show the relationship between science, reason, and philosophy. The only evidence we have that consciousness exists anywhere in the universe is that we experience it. None of the laws of physics predict the existence of consciousness, and we don’t know what exactly in our own brains is responsible for conscious experience. Therefore, we cannot make definitive statements about whether another system is conscious. But we can still reason about it. This is the basis of science: we do our best to come up with explanations, and then see how those explanations hold up when applied to new situations and data. As people much smarter than me have argued in entire books[2], we have to start from a philosophy of valuing good explanations to do science at all.

So, here’s the best reasoning I’ve heard about the likelihood that LLMs are having an experience.

Dr. Colin Hales

I recently recorded my first podcast episode with Dr. Colin Hales, an Australian consciousness researcher who has been publishing papers on AGI, consciousness, and the potential physical roots of consciousness for 20 years.

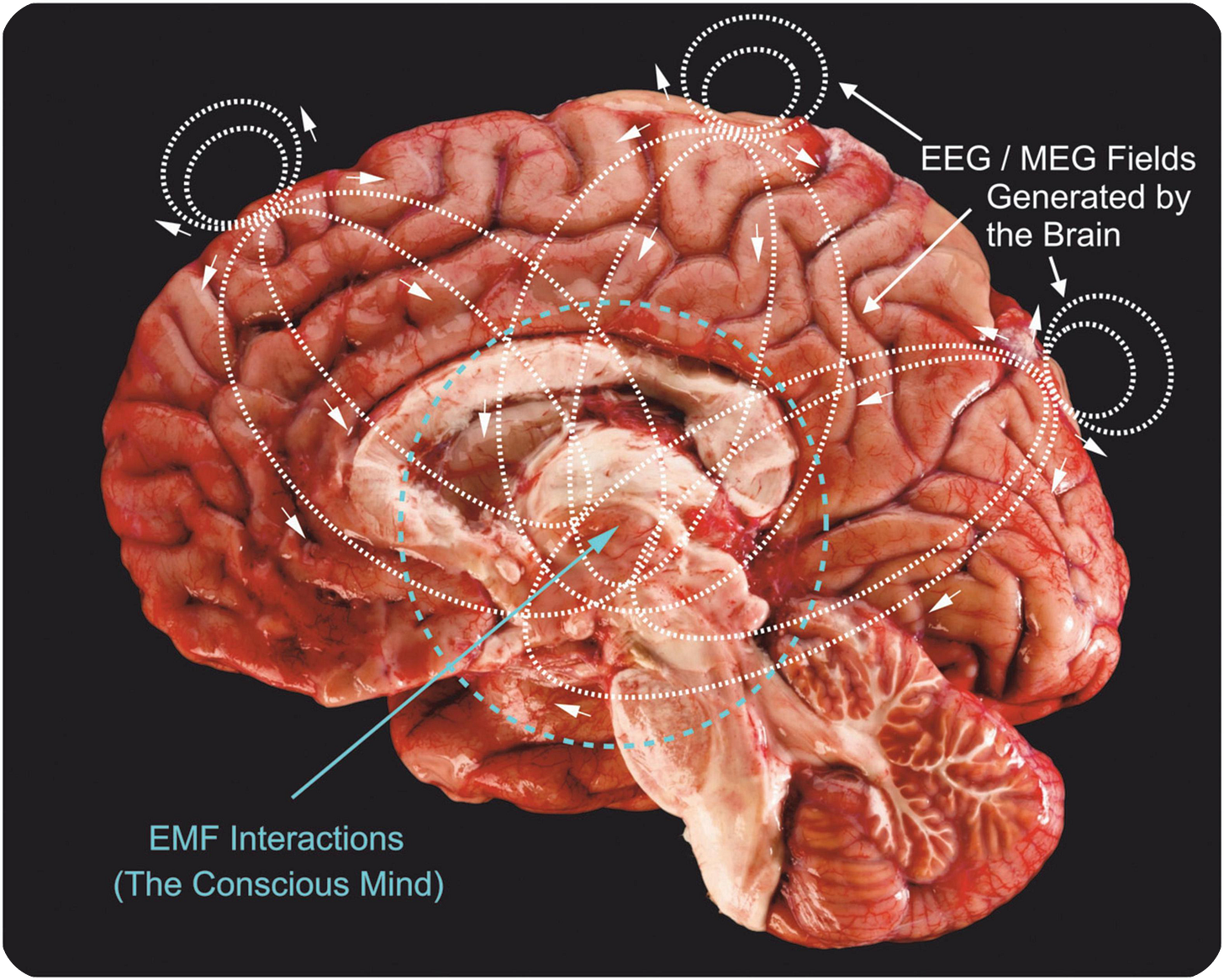

He describes two methods of signalling in the brain: axon spikes and ephaptic coupling.

Axon spikes are the direct neuron-to-neuron action potentials that everyone imagines and that every model of the brain includes: the typical ‘firing’ of neurons, usually depicted as a spark traveling between them. These spikes are the conceptual basis for modern computer chips and computation.

Ephaptic coupling is much more complex, and it is generally left out of brain models, even though it is a key brain process that shapes our cognition. Each time a neuron fires, its action potential produces a weak electromagnetic (EM) field that spreads out in all directions. If enough neurons fire together, their combined fields can cause other neurons to fire, even ones they have no direct connection to, giving the brain another way to communicate information without investing additional resources. As Dr. Hales says:

“In circuit boards, this would cause an unwanted activation that introduces noise and chaos into the system, so they’re designed from the start to block that from happening. The brain on the other hand cannot prevent them from happening, so evolution found a way to use them to its advantage.” (paraphrased)

Given that the brain is our only confirmed conscious system, it follows that some or all of the physics occurring in the brain is responsible for consciousness, and we cannot yet rule much of that physics out as unnecessary for conscious experience. Yet ruling it out is exactly what people do when they expect circuit boards to be conscious: they are equating a simplified replication of one form of signalling in the brain (axon spikes) with consciousness. Even in the brain, axon spikes continue in large volumes under anesthesia without any accompanying conscious experience, so axon spikes alone are not always sufficient!

Dr. Hales elaborates on this further in our conversation, and it’s also summed up nicely in this essay of his.

Basically, expecting consciousness from a simulation of one component of brain activity, one that doesn’t involve the same physics that occur in the brain, is a bit like expecting a flight simulator to take off. Simulating a physical process is not the same thing as that physical process. A computer doesn’t get hot just because it is simulating fire, and likewise, it almost certainly doesn’t become conscious because it is simulating cognition.

By this reasoning, assuming it feels like something to be an LLM is an unjustified leap. If the intelligence and reasoning they display seem impossible to pry apart from conscious experience, consider what actually happens when someone presses enter in ChatGPT: their input is converted to numbers, which then pass through a long series of matrix multiplications on GPUs in a datacenter. Is it intuitive that it should feel like something to be matrix multiplications computed on a circuit board?
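To make that concrete, here is a minimal Python sketch of the kind of arithmetic involved. It is a toy under loudly stated assumptions: the seven-word vocabulary, the 4-number word vectors, the random weights, and the names `tokenize`, `matmul`, and `next_token_probs` are hypothetical illustrations, not how ChatGPT or any real model is implemented (real models use trained weights, attention layers, and billions of parameters). What it does show is that every step is ordinary arithmetic: words become numbers, numbers are multiplied by matrices, and the result is a list of probabilities for the next word.

```python
import math
import random

# A toy "language model" forward pass. Everything here is hypothetical:
# real LLMs use trained weights, attention, and billions of parameters.

VOCAB = ["how", "many", "tablespoons", "are", "in", "a", "cup"]  # hypothetical tiny vocabulary
DIM = 4  # hypothetical size of each word's vector

rng = random.Random(0)  # fixed seed so runs are repeatable
# Random numbers stand in for trained weights.
EMBED = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]  # |VOCAB| x DIM
W_OUT = [[rng.uniform(-1, 1) for _ in VOCAB] for _ in range(DIM)]  # DIM x |VOCAB|


def tokenize(text):
    """Turn text into integer ids by looking each word up in VOCAB."""
    return [VOCAB.index(word) for word in text.lower().split()]


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (lists of lists)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    """Turn a list of scores into probabilities that sum to 1."""
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(text):
    ids = tokenize(text)                 # words -> numbers
    x = [EMBED[i] for i in ids]          # numbers -> vectors (n x DIM)
    n = len(x)
    mix = [[1.0 / n] * n for _ in range(n)]
    h = matmul(mix, x)                   # average all positions: a crude stand-in for attention
    logits = matmul(h, W_OUT)            # vectors -> one score per vocabulary word
    return softmax(logits[-1])           # last position's scores -> probabilities


probs = next_token_probs("how many tablespoons are in a cup")
print([round(p, 3) for p in probs])
```

With random weights the "prediction" carries no meaning, but the mechanics are the point. In a production model the matrices are enormous and there are many more layers, yet each step is still arithmetic of this kind running on a GPU.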

Computation is not consciousness. It isn’t necessarily intuitive that neurons generate consciousness either, but the critical difference is this: although we don’t fully understand neurons, we know they generate consciousness, whereas we understand GPUs completely and know they don’t replicate the physical processes of the brain.

Could machines ever be conscious?

Although this reasoning casts doubt on the possibility that modern-day LLMs are having an experience, it does not eliminate the possibility of ever creating machines that do.

Indeed, if a machine actually replicated all the physics that occur in the brain, then it would be unreasonable to think it wouldn’t also be conscious. In Dr. Hales’ view, this would involve the second signalling mechanism that the brain uses - electromagnetic fields. This is the basis of his current work, which he expands on in our podcast conversation.

While it’s unlikely that Gemini feels frustration after I google how many tablespoons are in a cup for the hundredth time, understanding consciousness is of paramount importance. It could be the difference between creating unconscious devices and creating beings that suffer[3].

A huge thank you to Dr. Hales for taking the time to speak with me and for lending his expertise. If you have questions that I can’t answer, I’ll forward them his way and aggregate responses in a future post!

Here are links to our full conversation:

Thanks for reading!

Edited by Minttu (my wife!) 🙌 The paragraphs that flow are thanks to the Saturday mornings she dedicates to making this publication legible 😅

[2] The best book to explain this is David Deutsch’s The Beginning of Infinity.